All AI Applications Start with Unified Data

Businesses of every size and industry share a growing concern: how to set up a data architecture that delivers the insights needed to realize business outcomes without breaking the bank. Hitachi Solutions data experts have supported businesses on their data maturity journeys for years, and data architecture plays a starring role in how we determine the next step for our customers.

Data warehousing techniques continue to evolve as new technologies emerge — such as AI and machine learning — and data analytics continues to drive business success. Growing requirements around data privacy and security also continue to push data warehousing to adapt and change. In the end, the goal of data warehousing is to operationalize business intelligence, providing business analysts and data scientists with fast access to any data type with a variety of computing tools and platforms.

Data is at the center of every application, process, and business decision. That creates the ultimate challenge: managing all that information, from storage and access to security and compliance. It only makes sense, then, that a modern data management platform that “uses” all the data an organization owns is the nirvana state. Not convinced? Read more from our experts: How to Approach Building a Modern Data Platform Now.

The way we were — a brief history of data warehouse approaches

Data warehousing emerged in the 1970s as businesses began to adopt computerized systems and realize the value of collecting and analyzing large amounts of data. Built by tech giants like IBM and Teradata, and using proprietary hardware and software, these early systems were expensive and difficult to maintain, limiting their adoption to large enterprises.

As we entered the 1980s, and volumes of data continued to grow, two new approaches to data warehousing arose. The first, led by Bill Inmon, is known as the “Top-down” approach. It involves a centralized data warehouse to store all the data for an organization in a normalized form. This approach focused on integration and consistency, with the goal of establishing a single source of truth for decision making. Inmon’s methodology emphasized the importance of data modeling, ETL (Extract, Transform, Load) processes, and data governance, and incorporated some of the core tenets we still use today. However, the relational nature of this model made it difficult to manage data at scale.

Ralph Kimball, on the other hand, developed the “Bottom-up” approach to data warehousing, which addressed this issue. Kimball’s methodology emphasized building data marts, which were smaller, and more business/department centered. These data marts were designed to be flexible and easy to maintain and were built using a dimensional modeling approach. This method highlighted data accessibility and user-friendly interfaces, with the goal of making data analysis easier and more accessible to business users.

Through the 1990s and 2000s, the Inmon and Kimball methodologies merged and evolved to create a more comprehensive hybrid approach that includes a scalable, flexible, and sustainable data architecture that can adapt to the changing needs of an organization. This made data warehousing much more mainstream. And as more and more companies began to embrace the importance of data-driven decision making, the introduction of online analytical processing (OLAP) and data mining tools made it easier to extract insights from data. The rise of big data and cloud computing brought new challenges and opportunities for data warehousing as well.

These traditional data warehouse approaches have solved data problems for a long time, but in today’s digital world, they simply can’t fulfill the diverse requirements of modern workloads. Organizations face increasing pressure to manage many different types of data and at a scale that was previously unheard of. Data lakes were built to alleviate this pressure; however, they still lacked openness and created data swamps. Lakehouses are the next generation of data solutions and support the new field of data science — which handles a myriad of unstructured data types and huge volumes of data.

Why Get Excited About Microsoft Fabric

In plain English, what we’re seeing now is the next evolution and consolidation of data architecture – which ironically isn’t primarily driven by a gap in technological capabilities. Rather, it’s a need to extend the capabilities of data & analytics solutions to a broader audience. Enter Microsoft Fabric.

Microsoft Fabric is intended, in typical Microsoft fashion, to create an accessible environment that democratizes data for business users of all types, including those who don’t have deep technical understanding. It breaks down the silos that have grown up around the functions involved in end-to-end information processing, so that organizations can make better, more informed decisions.

Today, our work with customers begins with a review of their Azure platform that covers both technical and non-technical considerations for analytics and governance in the cloud. Making sure their projects are delivered and deployed in a governed, secure, and scalable way across all levels of the organization, with attention to scale, cost, security, and compliance (HIPAA, SOX, GDPR, etc.), can easily require a three-to-six-week cloud-scale analytics engagement.

Next comes the one-to-two-week infrastructure deployment to install, configure, deploy, and test to ensure the customer has consistent, proven architecture, aligned with Microsoft and industry best practices, to support their interconnected systems and data-flow requirements.

With the foundation set, the ingestion of the customer’s source-system data into a Data Lake is the next step. This step, easily one-to-four weeks based on the customer’s data volume, is a foundational task to drive business value from the ‘use’ of the company’s data.

At this point, all the “platform” work is completed, and focus on the customer’s end goal, their data warehouse, begins – and depending on the scope, it could be a many-week engagement.

After gathering and finalizing the customer’s needs on a single subject area, we’ll play back their expectations through industry-standard documentation, such as data model diagrams created with Visio. Moreover, we’ll mock up these data models in Power BI and demo them to the customer to prove that they will, indeed, functionally meet their requirements. Once the data models (aka “star schemas”) have been created in a database (which is the bulk of the effort), we’ll load them into a Microsoft Power BI dataset and layer business semantics or “measures” on top of them. It’s at this point we can perform UAT/QA testing and receive sign-off from the customer that the measure calculations are correct. Finally, we’ll equip the customer with the knowledge to own and operate everything we’ve created, before repeating the entire process with a new subject area.
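To make the “star schema plus measures” idea concrete, here is a minimal sketch using Python’s built-in sqlite3 module. The table names, columns, and sample rows are hypothetical and chosen only for illustration; they are not taken from any customer model. Power BI measures are normally written in DAX, so the SQL aggregate below only stands in for the concept of a calculation layered on top of fact and dimension tables.

```python
import sqlite3


def build_star_schema(conn):
    """Create one dimension table and one fact table (hypothetical names)."""
    conn.executescript(
        """
        CREATE TABLE dim_product (
            product_key INTEGER PRIMARY KEY,
            category    TEXT
        );
        CREATE TABLE fact_sales (
            product_key INTEGER REFERENCES dim_product(product_key),
            quantity    INTEGER,
            unit_price  REAL
        );
        """
    )
    # Illustrative sample data only.
    conn.executemany(
        "INSERT INTO dim_product VALUES (?, ?)",
        [(1, "Bikes"), (2, "Helmets")],
    )
    conn.executemany(
        "INSERT INTO fact_sales VALUES (?, ?, ?)",
        [(1, 2, 500.0), (1, 1, 500.0), (2, 4, 25.0)],
    )


def total_sales_by_category(conn):
    """A 'measure': total sales amount, sliced by a dimension attribute."""
    rows = conn.execute(
        """
        SELECT d.category, SUM(f.quantity * f.unit_price)
        FROM fact_sales AS f
        JOIN dim_product AS d ON d.product_key = f.product_key
        GROUP BY d.category
        ORDER BY d.category
        """
    ).fetchall()
    return dict(rows)


if __name__ == "__main__":
    connection = sqlite3.connect(":memory:")
    build_star_schema(connection)
    print(total_sales_by_category(connection))
```

Checking a result like this against figures the customer already trusts is the kind of comparison the UAT/QA step relies on.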

Where Are We Going

Microsoft Fabric is the next power tool. It reduces what was previously a very complex technical approach to a single sign-on experience with built-in integration, giving a much broader audience across your enterprise access to ALL your data for their reporting needs. Think “DIY” data expert for the less technical audience: Microsoft Fabric lets your business users stay where they work (Microsoft 365) yet obtain and share critical insights without the cost and complexity this required in the past.

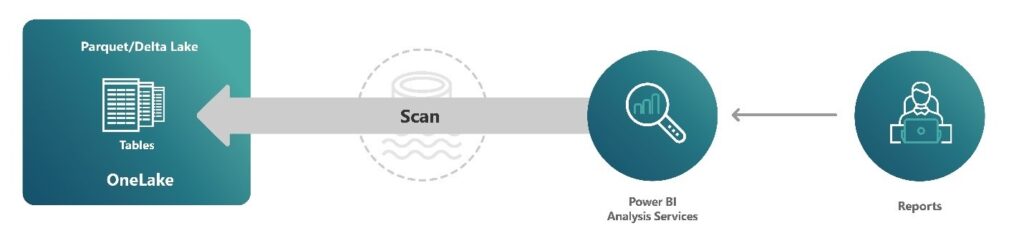

Sounds too good to be true? Perhaps, but it is real. With Microsoft Fabric, all your compute engines store their data automatically in OneLake in a single common format. Once data is stored in the lake, it is directly accessible by all the engines without any import/export, so every Microsoft Office user can leverage the insights stored there. In the end, this is a MUCH FASTER path than the steps required today, as outlined above.
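The “one copy, one format, many engines” idea can be illustrated with a toy sketch. Nothing here uses a Fabric or OneLake API: a temporary directory stands in for the lake, JSON Lines stands in for the common format, and the two “engines” are hypothetical functions that read the same file in place instead of importing their own copies.

```python
import json
import tempfile
from pathlib import Path


def write_table(lake: Path, name: str, rows: list) -> Path:
    """One engine writes a table once, in the lake's common format."""
    path = lake / f"{name}.jsonl"
    with path.open("w", encoding="utf-8") as handle:
        for row in rows:
            handle.write(json.dumps(row) + "\n")
    return path


def read_table(lake: Path, name: str) -> list:
    """Any engine reads the shared file directly; no export or import step."""
    path = lake / f"{name}.jsonl"
    with path.open(encoding="utf-8") as handle:
        return [json.loads(line) for line in handle]


def warehouse_total(lake: Path) -> int:
    """Hypothetical 'warehouse engine': aggregates over the shared table."""
    return sum(row["amount"] for row in read_table(lake, "sales"))


def report_row_count(lake: Path) -> int:
    """Hypothetical 'reporting engine': counts rows in the same table."""
    return len(read_table(lake, "sales"))


def demo():
    with tempfile.TemporaryDirectory() as tmp:
        lake = Path(tmp)
        write_table(
            lake,
            "sales",
            [{"region": "East", "amount": 120}, {"region": "West", "amount": 80}],
        )
        copies = len(list(lake.iterdir()))  # still a single stored copy
        return warehouse_total(lake), report_row_count(lake), copies


if __name__ == "__main__":
    print(demo())
```

Both functions answer from the same stored file, which is the property the paragraph above describes: no engine holds a private duplicate of the data.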

Let’s break it down…

“Platform”: This word has become somewhat of a commercialized term. With Microsoft Fabric, however, we are entering a new realm where all the components truly are delivered in a complete, integrated, single offering for end-to-end analytics.

“End-to-end”: Yet another often-used term, it will become known for Microsoft Fabric’s ability to embrace the many elements that make up a platform, from the tech and security to the governance and the ways business users consume information to do their jobs. Again, a complete, single offering.

“Unified”: Always an aspiration of any business, unifying data across an enterprise is now more easily achieved with Microsoft Fabric’s modern data warehouse approach, which provides a robust set of features and capabilities for collaboration, sharing, and analysis through a fully interactive query experience. AKA: faster time to value and consumption of your data.

“OneLake”: Not to get too deep into tech-speak, but OneLake becomes the true hub-and-spoke data mesh for your organization. And if the concepts of Data Fabric and Data Mesh are not familiar, our team of experts has been helping customers capitalize on the true business value of their data for years. Interested in knowing more? Read our blog: Data Fabric and Data Mesh: Does the Future Need You?

Microsoft Fabric, or for fun, “See-through Mode”: Sounds new-age, doesn’t it? In simple terms, the reason this is so cool is that technology is now available to truly stream data in real time, so that constantly refreshed data makes your reporting more accurate and up to date. It’s been a journey. Remember the “real-time” data access that was real-time but slow? Then came the faster, but latent and duplicative, leg of the journey. Next up is the perfect combination of quick and secure accessibility.

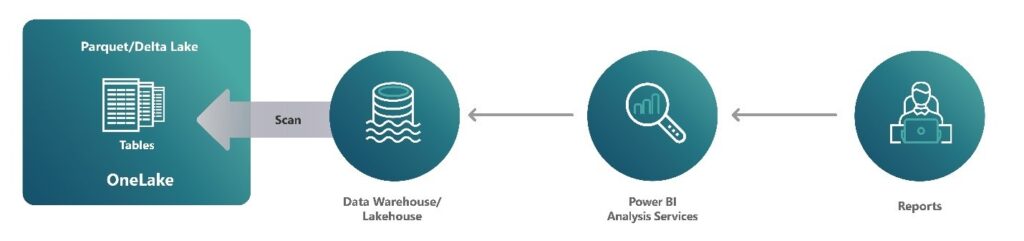

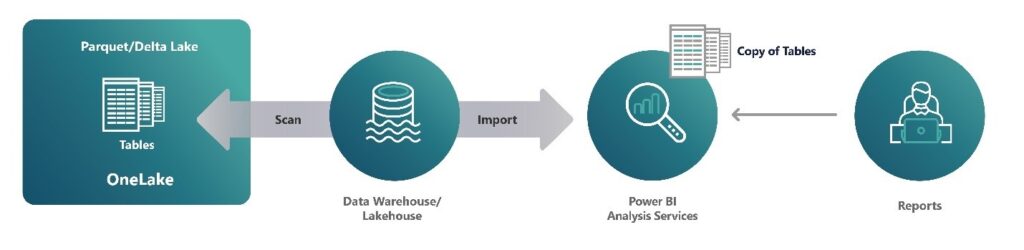

It looks like this: Direct Query Mode, Import Mode, and Microsoft Fabric.
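The trade-off between the first two modes can be shown with a small toy model. This is a sketch under stated assumptions, not Power BI or Fabric code: `Source`, `DirectQueryReport`, and `ImportReport` are hypothetical classes invented for illustration. The sketch does not attempt to model the Fabric mode itself; it only shows why one earlier mode is always current but dependent on the source, and the other is a duplicate that lags until refreshed.

```python
class Source:
    """Stand-in for a source system; counts how often it is queried."""

    def __init__(self):
        self.rows = []
        self.reads = 0

    def query(self):
        self.reads += 1
        return list(self.rows)


class DirectQueryReport:
    """Every view goes back to the source: always current, always a round trip."""

    def __init__(self, source):
        self.source = source

    def view(self):
        return self.source.query()


class ImportReport:
    """Views are served from a private copy: fast, but stale until refreshed."""

    def __init__(self, source):
        self.source = source
        self.cache = []

    def refresh(self):
        self.cache = self.source.query()  # duplicates the data

    def view(self):
        return list(self.cache)


def demo():
    source = Source()
    source.rows.append("order-1")

    direct = DirectQueryReport(source)
    imported = ImportReport(source)
    imported.refresh()

    source.rows.append("order-2")  # new data arrives after the import

    return direct.view(), imported.view(), source.reads


if __name__ == "__main__":
    print(demo())
```

In this toy run the direct report sees both orders while the imported report still shows only the first, which mirrors the “real-time but slow” versus “faster, but latent and duplicative” legs of the journey described above.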

From data warehouse management to business user reporting, Microsoft Fabric will allow your organization to realize “one place for all data” and be the vehicle to deliver rich analytics.

JUMPSTART Your Adoption

It’s not difficult to see how Microsoft Fabric is a game changer. What may be less clear is how it can accelerate your data journey. Microsoft Fabric is not yet generally available, but waiting will put you further behind in reaching your potential.

Hitachi Solutions is helping customers GET STARTED NOW with the Microsoft Fabric public preview. We accelerate your organization’s understanding and adoption of Fabric’s capabilities by developing a clear path to success that shows how your business can benefit.

Hitachi Solutions’ technical experts will create a sandbox environment in your tenant which includes Microsoft Fabric and Hitachi Solutions Empower Data Platform working in tandem to accelerate cloud-enabled data workloads. At the same time, our experienced advisory consultants work with you to outline a path for your organization to quickly adopt Fabric into your business processes once it becomes generally available.

THIS is how our team of business solutions and technology experts will help you realize business value and jumpstart your adoption with a clear path to success. Hitachi Solutions has expertise across Microsoft’s entire stack of technical solutions and is 100% focused on Microsoft. This is not a subset of our R&D time and effort; it is all of it. Because we were an early and active participant with Microsoft during Fabric’s private preview, our Empower Data Platform is built to streamline your infrastructure and power your data ingestion, and it is a natural ally in optimizing your Microsoft Fabric strategy.

Why Partner with Hitachi Solutions

Our team of experts, who have been working alongside Microsoft under NDA, has gained a deep understanding of Microsoft Fabric through our involvement in developing and testing the platform. That experience, combined with our deep expertise in data warehouse technologies and our Microsoft focus, positions us well to help you harness the possibilities for your business.

We are People-first, Experience-led, and Customer-driven.

Hitachi Solutions’ mission is to work with our customers to solve their business challenges through a flexible, scalable, and supportable digital strategy that is focused on business success.

We solve business issues by developing a deep understanding of what makes your company work, and by exploring how we can make your business better through specific solution sets that work for everyone in your operation.

From best-in-class databases and analytics to governance and security, all things data continue to drive digital transformation initiatives across companies large and small. Hitachi Solutions provides our customers with a broad array of solutions — from unified, modern Azure-based data platforms to turnkey, SaaS-based models that reduce development and capital costs — all created to turn data into predictive and analytical power.

Our goal is to align technology with people and processes to solve business challenges. We help our customers innovate when they need to, and we partner with and advise them on how to choose the right solutions for their evolving business. We test and transform through change enablement and ongoing, flexible support to realize the full value and return on digital strategy and technological investment.

Jumpstart your adoption of Microsoft Fabric – GET STARTED with our experts today!